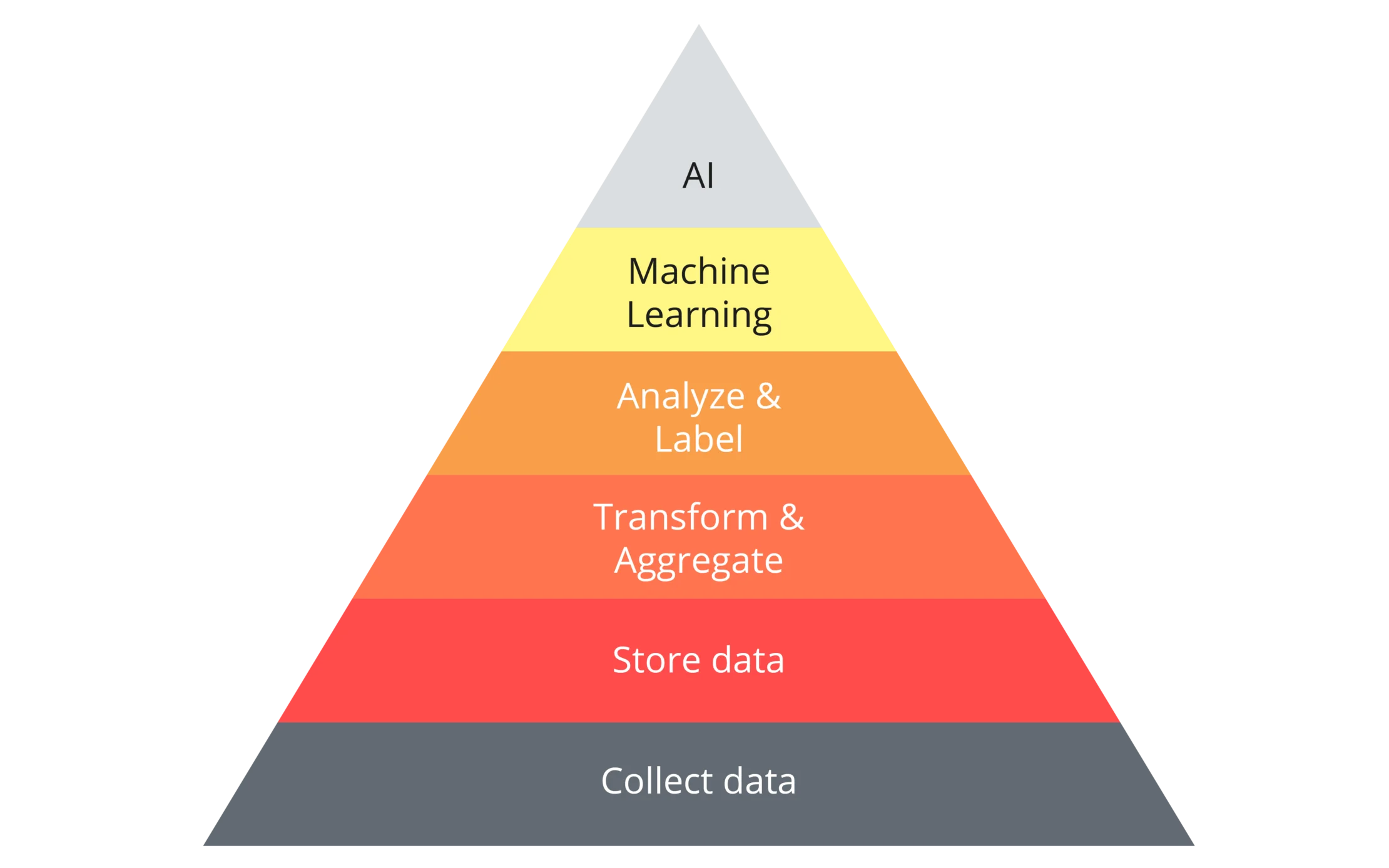

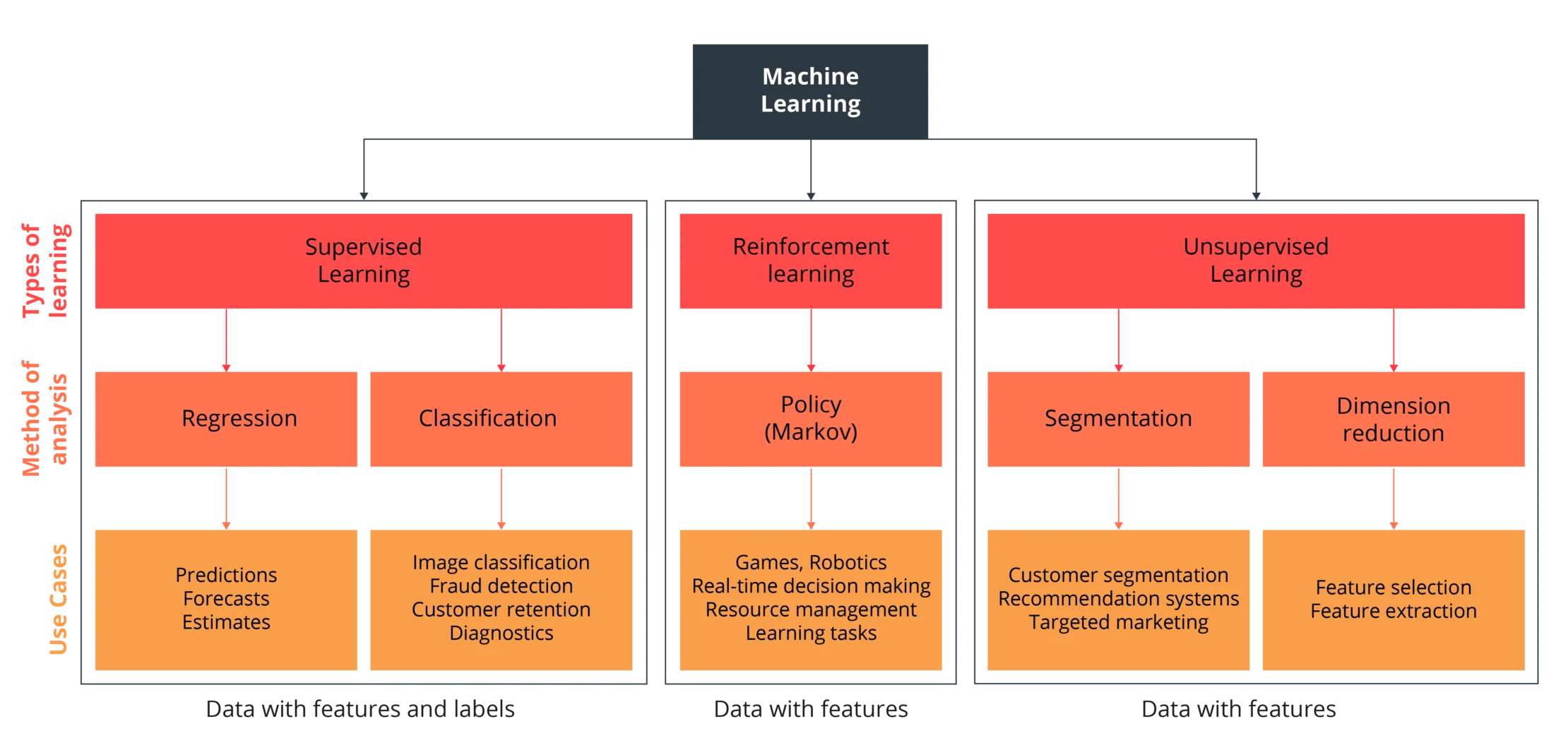

Supervised learning

In supervised learning, the data set consists of previously labeled records, each made up of features and a label. The goal of a supervised learning algorithm is to use this data to build a model that takes a feature or a set of features as input and predicts the label for new data records. Supervised learning is used for forecasting (regression), fraud detection (classification), risk assessment of investments, and estimating the probability of machine failure (predictive maintenance).
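As a rough illustration of learning from features and labels, the following sketch fits a straight line to a handful of labeled records using ordinary least squares and then predicts the label for a new record. The data, the function names `fit_line` and `predict`, and the choice of a single numeric feature are assumptions made for this example; real applications usually involve many features and a library implementation.

```python
def fit_line(features, labels):
    """Learn slope and intercept from labeled records (ordinary least squares)."""
    n = len(features)
    mean_x = sum(features) / n
    mean_y = sum(labels) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(features, labels))
    var_x = sum((x - mean_x) ** 2 for x in features)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, feature):
    """Apply the learned model to the feature of a new, unlabeled record."""
    slope, intercept = model
    return slope * feature + intercept


# Hypothetical training data: one feature per record and the known label.
train_features = [1.0, 2.0, 3.0, 4.0]
train_labels = [3.0, 5.0, 7.0, 9.0]

model = fit_line(train_features, train_labels)
prediction = predict(model, 5.0)  # label estimate for a record not seen in training
print(model, prediction)
```

The same pattern, fitting on labeled data and then predicting for new records, carries over to classification tasks such as fraud detection, where the label is a category rather than a number.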

Unsupervised learning

In unsupervised learning, the algorithm works on a data set that consists solely of features. In contrast to supervised learning, the data has no labels, so there is no target variable. The goal of unsupervised learning is to identify hidden patterns and similarities in the data. These patterns and similarities can be used to group data (segmentation) and to reduce the complexity of a data set (dimensionality reduction). Both make the data easier to visualize and to interpret. In addition, the results of the algorithms can be used as features for further data science analyses. Classic use cases for unsupervised learning are customer segmentation, recommender systems, and targeted marketing.
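Segmentation can be sketched with k-means, one common clustering algorithm (the text above does not prescribe a particular method). The example groups unlabeled two-dimensional points into two segments. The data points, the function names, and the explicitly supplied starting centroids are assumptions for illustration; library implementations typically choose starting centroids automatically.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def k_means(points, initial_centroids, max_iterations=100):
    """Group points around centroids; returns (assignments, centroids)."""
    centroids = [tuple(c) for c in initial_centroids]
    assignments = None
    for _ in range(max_iterations):
        # Assignment step: each point joins the segment of its nearest centroid.
        new_assignments = [
            min(range(len(centroids)), key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # no point changed segment, so the grouping is stable
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == k]
            if members:
                centroids[k] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignments, centroids


# Hypothetical unlabeled data, e.g. two features per customer.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]

# Assumption: one starting centroid is taken from each visibly separate region.
segments, centers = k_means(points, initial_centroids=[points[0], points[3]])
print(segments)
```

Note that no labels are passed in at any point; the segment numbers are produced by the algorithm and carry no meaning beyond "these records are similar".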

Reinforcement learning

In reinforcement learning, the machine (agent) acts in a virtual or real environment. The agent can perform actions in this environment, and each action is evaluated through rewards and costs. In so doing, the agent learns a policy, that is, a rule for choosing actions, that maximizes its cumulative reward. The machine therefore uses feedback from interaction with its environment to optimize future actions and avoid errors. Unlike supervised and unsupervised learning, the algorithm does not require a prepared set of sample data, but only an environment and a reward function against which to optimize its actions. Reinforcement learning is applied in areas where decisions are made sequentially. Classic applications include computer games, robotics, resource management, and logistics.
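The interaction loop between agent and environment can be sketched with tabular Q-learning, one of several reinforcement learning algorithms (the text above does not name a specific one). The environment here is a hypothetical corridor of five cells in which the agent starts on the left and is rewarded for reaching the rightmost cell. All names, the reward of 1.0, and the parameter values are assumptions for this example. For simplicity the agent explores by choosing actions uniformly at random, which Q-learning permits because it learns about the best action regardless of the action actually taken.

```python
import random

N_STATES = 5                 # corridor cells 0..4; cell 4 is the goal
ACTIONS = ("left", "right")


def step(state, action):
    """Environment: apply an action and return (next_state, reward)."""
    if action == "right":
        next_state = min(state + 1, N_STATES - 1)
    else:
        next_state = max(state - 1, 0)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def q_learning(episodes=200, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values purely from interaction with the environment."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            action = rng.choice(ACTIONS)  # random exploration
            next_state, reward = step(state, action)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate toward reward plus discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q_table = q_learning()
# The learned policy picks, in each non-goal cell, the action with the highest value.
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

No sample data set is involved: the agent only ever sees the states and rewards returned by `step`, and the policy emerges from that feedback.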

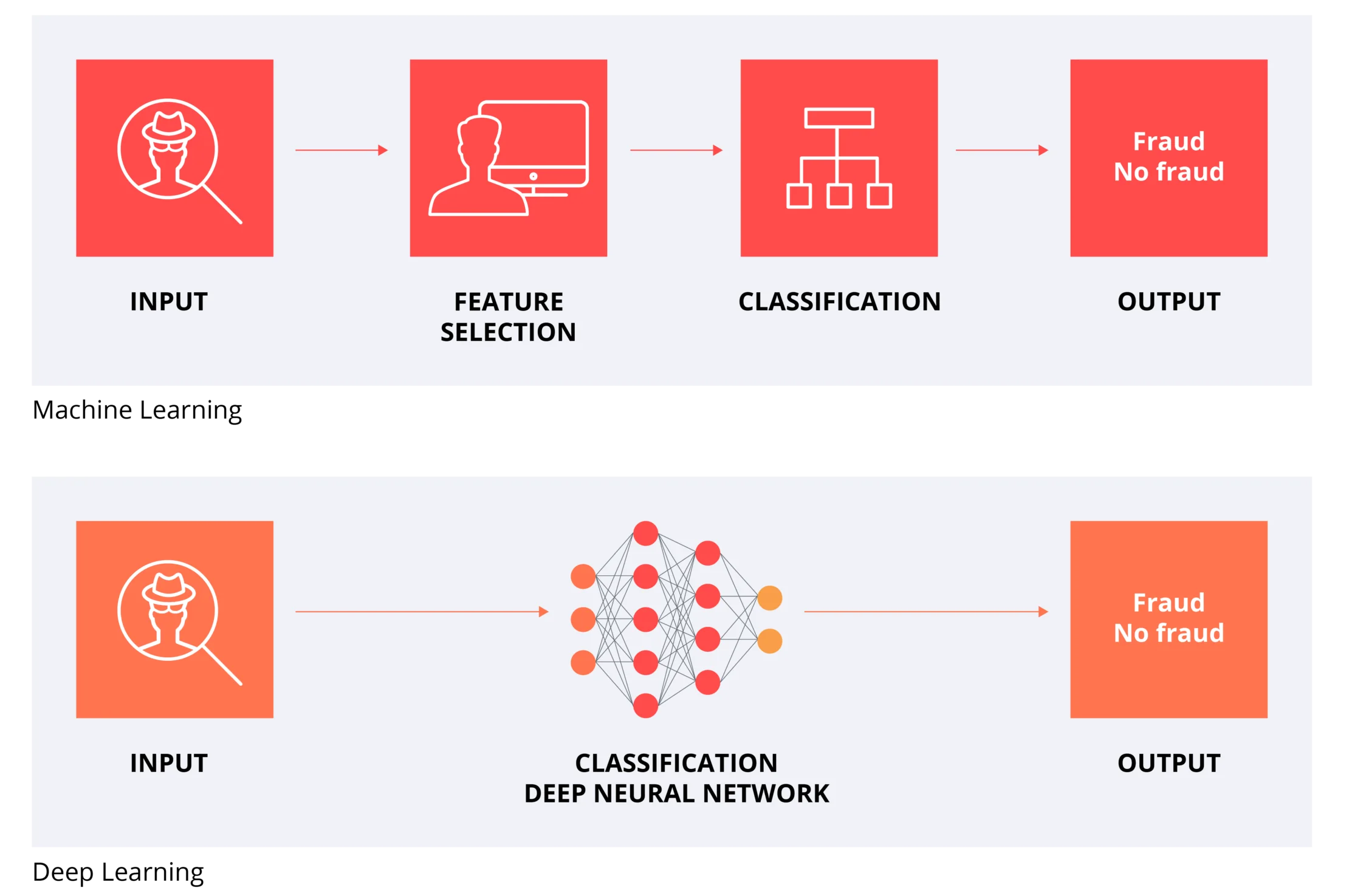

Deep learning

Deep learning is a subset of machine learning that uses artificial neural networks. A neural network is called deep when it has more than one intermediate (hidden) layer. Neural networks are particularly suitable for complex applications and for unstructured data such as images, audio, and text, which often occurs in Big Data scenarios. Deep learning is already being used in a variety of AI applications, for example voice assistants, face recognition, and autonomous driving, where it is driving disruptive change.
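To make the notion of intermediate layers concrete, the following sketch passes an input through a small network with two hidden layers and one output layer. The weights are hand-picked for readability and are not trained, and the layer sizes, the ReLU activation, and the function names are assumptions for this example. In practice the weights are learned from data, and a framework handles the computation.

```python
def relu(values):
    """Activation function: negative values are set to zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer; weights[j] holds the incoming weights of neuron j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Pass the inputs through every layer; ReLU follows each hidden layer."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:  # the output layer stays linear
            activations = relu(activations)
    return activations


# Hand-picked, untrained weights (illustrative only).
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden layer 1: 2 inputs -> 2 neurons
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, 1.0]),  # hidden layer 2: 2 -> 2 neurons
    ([[2.0, -1.0]], [0.5]),                   # output layer: 2 -> 1 neuron
]

output = forward([2.0, 1.0], layers)
hidden_layer_count = len(layers) - 1  # 2, so this network counts as deep
print(output)
```

Each additional hidden layer transforms the output of the previous one, which is what allows deeper networks to represent more complex relationships than a single layer can.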